with Mike McCrea. Best Paper award at SIGGRAPH 2017 (read the paper here)

Introduction

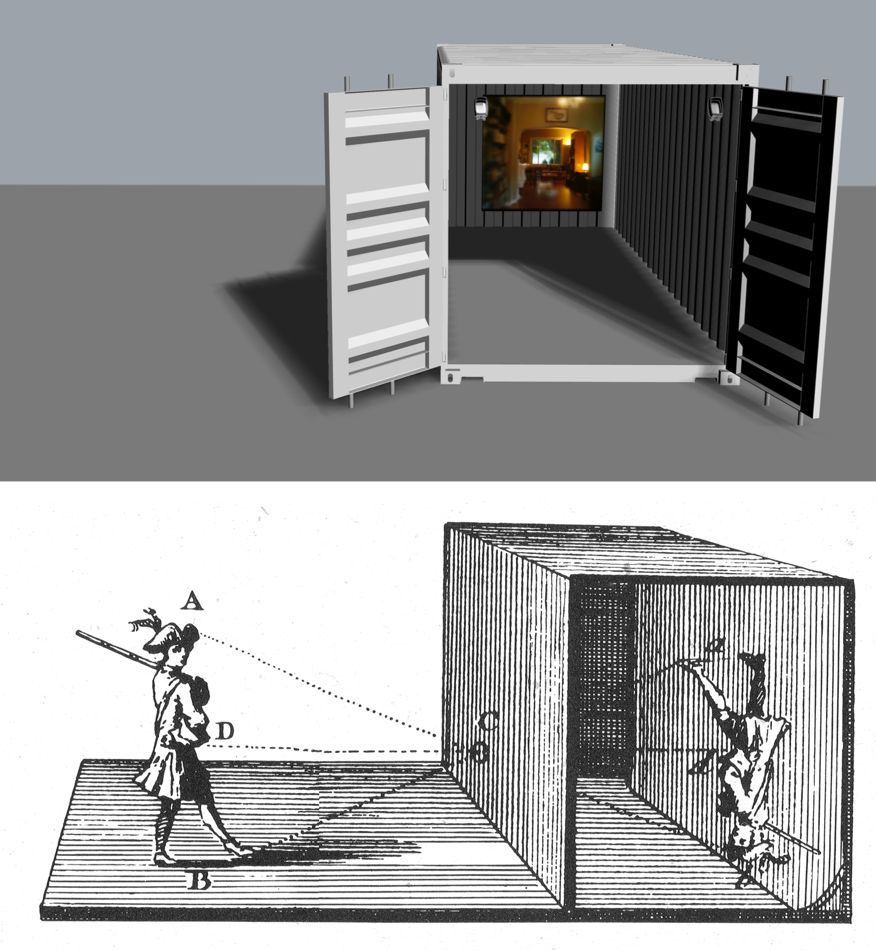

Rover is a mechatronic imaging device inserted into quotidian space, transforming the sights and sounds of the everyday into dreamlike cinematic experience. A kind of machine observer or probe, it knows very little of what it sees.

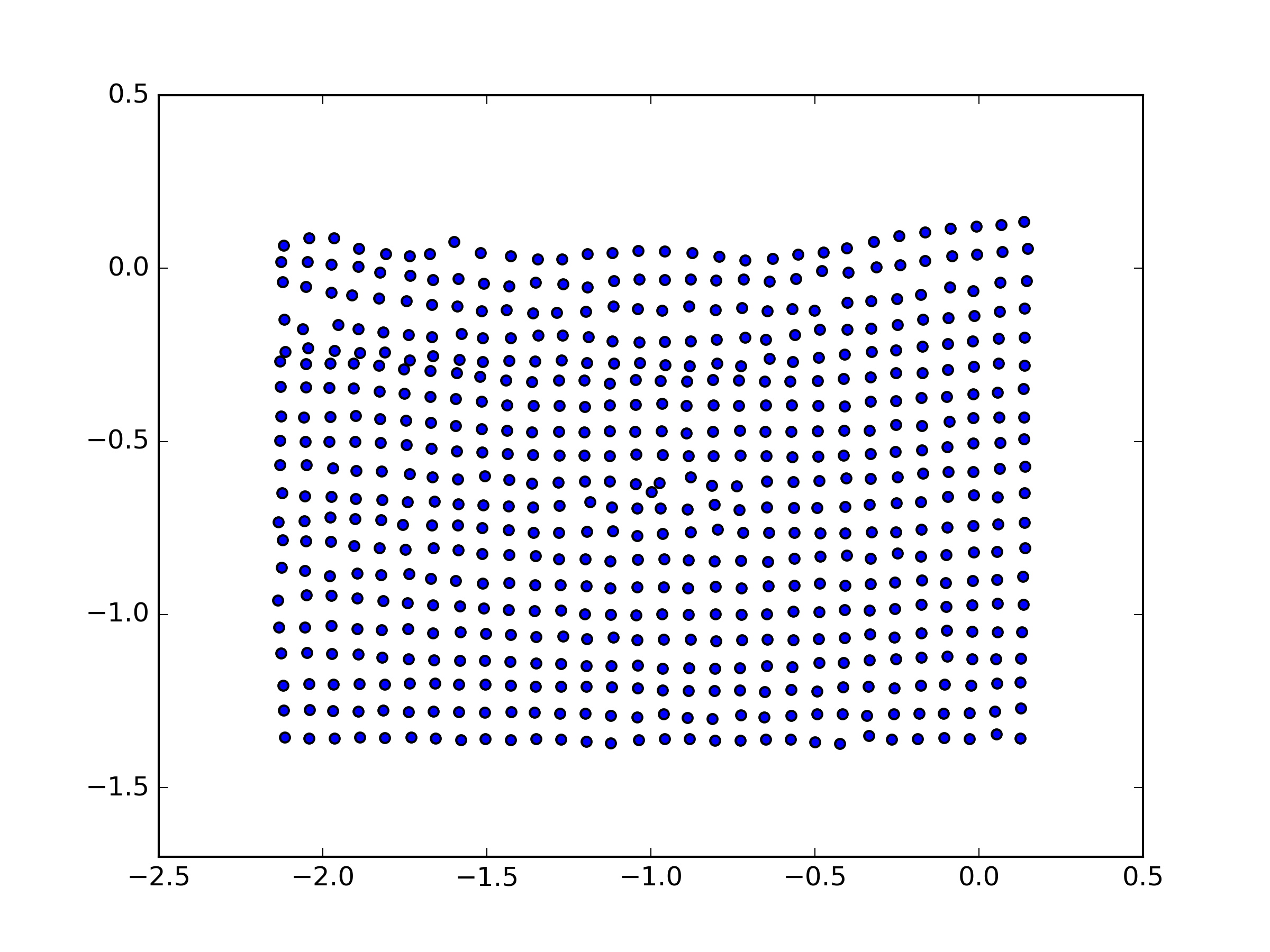

Using computational lightfield capture, Rover records all light incident through a scene. Bounded in a physical sense by the system of motors and belts delimiting its 2-dimensional plane of travel, nonetheless it explores the world before it. It strains outwards from this grid into space it cannot readily inhabit: our space. It records sequential images to document where it is, when it is. Later, through an algorithmic manipulation of those images, we witness its search through a past of imperfect moments, synthesizing dreamlike views of the spaces and scenes it previously inhabited.

While looking, Rover also listens. It records and extracts audio using machine-listening techniques, retrieving sounds we would otherwise dismiss. Just as images are ceaselessly churned by the device, sounds are revisited and reshaped until they are no longer commonplace.

The result is a kind of cinema that follows the logic of dreams: suspended but still mobile, familiar yet infinitely variable in detail. Indeed, the places we visit through Rover’s motility are the kinds of places we find ourselves in dreams: cliffside, seaside, bedside, adrift and unable to return home, or trapped in the corners of those homes.

Process

The imagery gathered for this iteration of Rover was captured with a custom mechatronic light field capture system designed to be portable and scalable according to the framing and depth required for each scene.

By gathering hundreds of images in a structured way, we are able to create a synthetic camera “aperture” which allows us to resynthesize a scene after the fact, refocusing, obscuring and revealing points of interest in real time. The result is a non-linear hybrid between photography and video.
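The idea of a synthetic aperture can be illustrated with a minimal shift-and-add sketch. This is not Rover's actual resynthesis software: the function names (`shift_image`, `refocus`), the dictionary of views keyed by grid position, the integer-pixel shifts and the sign convention for the shift are all assumptions made for illustration. Each view is shifted in proportion to its offset from the grid centre and the results are averaged, so objects at the chosen disparity line up and stay sharp while everything else blurs.

```python
def shift_image(img, dx, dy):
    """Shift a 2D image (list of rows) by integer (dx, dy) pixels, padding with zeros."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        sy = y - dy
        if not 0 <= sy < h:
            continue
        for x in range(w):
            sx = x - dx
            if 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def refocus(views, disparity, aperture_radius):
    """Shift-and-add refocusing over a planar grid of views.

    views: dict mapping a grid position (u, v) to a 2D image.
    disparity: pixels of shift per unit of camera offset (selects the focal plane).
    aperture_radius: only views within this distance of the grid centre contribute.
    """
    positions = list(views)
    cu = sum(u for u, _ in positions) / len(positions)
    cv = sum(v for _, v in positions) / len(positions)
    # The synthetic aperture is simply the subset of views near the centre.
    selected = [(u, v) for u, v in positions
                if (u - cu) ** 2 + (v - cv) ** 2 <= aperture_radius ** 2]
    first = views[positions[0]]
    h, w = len(first), len(first[0])
    acc = [[0.0] * w for _ in range(h)]
    for u, v in selected:
        dx = round(disparity * (u - cu))
        dy = round(disparity * (v - cv))
        shifted = shift_image(views[(u, v)], dx, dy)
        for y in range(h):
            for x in range(w):
                acc[y][x] += shifted[y][x]
    return [[value / len(selected) for value in row] for row in acc]
```

In this sketch, changing `disparity` moves the plane of focus and changing `aperture_radius` widens or narrows the depth of field; a radius of zero reduces to the single central view.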

In a somewhat analogous process, audio is recorded at the site of each light field capture and analyzed to find events and textures of interest using Music Information Retrieval (MIR), a family of audio analysis and classification techniques. Based on the features discovered in the recordings, sonic moments or textures which may have otherwise gone unnoticed are exposed and recomposed in concert with the visual system.
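As a rough illustration of feature-based event finding, the sketch below computes two simple per-frame features (RMS level and zero-crossing rate) and flags frames that stand out from the background level. The project itself used SCMIR in SuperCollider; the feature choice, the function names (`frame_features`, `find_events`) and the median-based threshold here are assumptions, not a description of that toolchain.

```python
import math


def frame_features(samples, frame_size):
    """Return an (rms, zero_crossing_rate) pair for each non-overlapping frame."""
    feats = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(s * s for s in frame) / frame_size)
        crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
        feats.append((rms, crossings / (frame_size - 1)))
    return feats


def find_events(features, rms_factor=2.0):
    """Indices of frames whose RMS exceeds rms_factor times the median RMS."""
    levels = sorted(rms for rms, _ in features)
    median = levels[len(levels) // 2]
    return [i for i, (rms, _) in enumerate(features) if rms > rms_factor * median]
```

A real analysis would use richer descriptors (spectral features, onsets, and so on), but the shape of the process is the same: describe each slice of the recording with numbers, then select slices by those numbers.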

Some of the techniques and technologies used include:

- Music Information Retrieval for audio classification (using SCMIR by Nick Collins)

- K-means clustering for ordering sound according to self-similarity

- Visual Structure From Motion for recovering image locations and rectifying all images to a common image plane

- Custom software driving the resynthesis of the light field scenes (via OSC from SuperCollider)

- Real-time audio granulation software written in SuperCollider
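To make the clustering step in the list above concrete, here is a minimal K-means sketch that groups sound segments by their feature vectors and then orders them so that similar sounds sit next to each other. It assumes each segment has already been reduced to a short tuple of numeric features; the function names, the deterministic initialisation and the ordering rule (by cluster, then by distance to the cluster centre) are illustrative choices, not the method used in the piece.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=20):
    """Plain Lloyd's algorithm. Returns (centroids, labels)."""
    # Deterministic initialisation: pick k points spread evenly through the input.
    step = len(points) / k
    centroids = [list(points[int(i * step)]) for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c]))
                  for p in points]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, labels


def order_by_similarity(points, labels, centroids):
    """Indices grouped by cluster, most typical members of each cluster first."""
    return sorted(range(len(points)),
                  key=lambda i: (labels[i], sq_dist(points[i], centroids[labels[i]])))
```

Playing segments back in the returned order would move through one family of sounds before crossing into the next, which is one plausible reading of "ordering sound according to self-similarity".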

Exhibition

Rover was presented at the Black Box 2.0 Festival, May 28 to June 7, 2015.
