Proposal for Currents 2016 // with Mike McCrea


Project Description

Rover is a mechatronic imaging system inserted into quotidian space, transforming the sights and sounds of the everyday into a dreamlike cinematic experience. Through computational light field capture and machine-listening techniques, Rover creates a kind of cinema that follows the logic of dreams: suspended but mobile, familiar yet infinitely variable in detail.

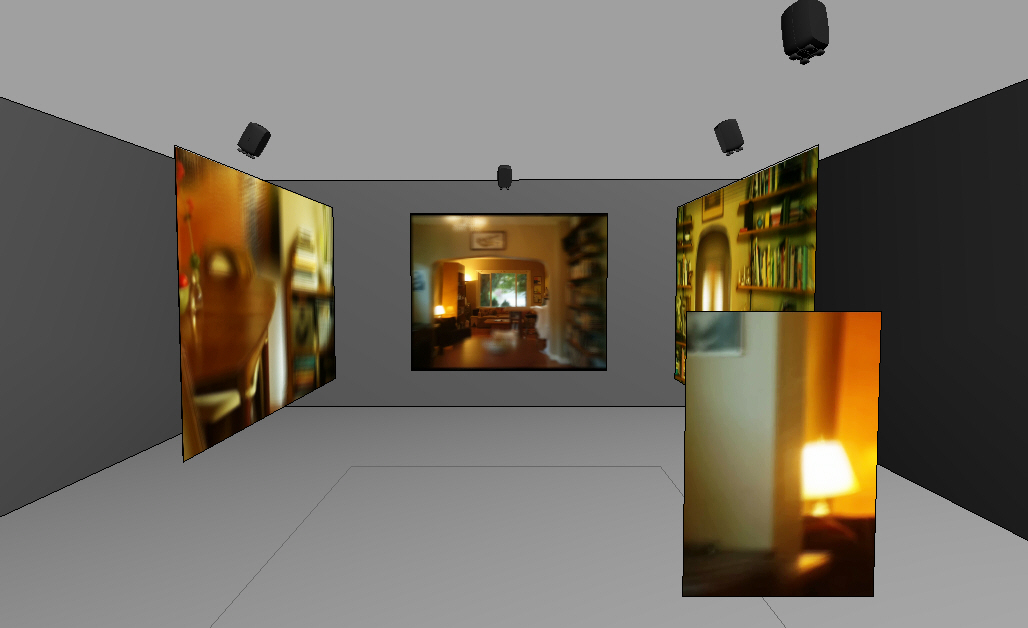

Rover is iterative by nature, and each installation is an exploration of a new site. For Currents 2016, Rover is shown through four sound and image projections arranged to suggest a dwelling, looking both outwards and inwards. Visual and aural scenes are analyzed by computer and shaped into focused vignettes displayed on the “walls” and “windows” of the projection surfaces.

The projection scenario in Rover, along with the painterly quality of its imagery, evokes Rover’s antecedent: the camera obscura. Rather than projecting fixed scenes, Rover produces a shifting image-architecture, enveloping viewers in echoes, reflections, and fleeting moments. This effect is made more pronounced by the viewer’s ability to move between and around the projection screens.

Rover’s wandering focus resonates with our own capacity to conjure dreamlike space from fragments of memory. Through Rover’s travels we visit the kinds of places we find ourselves in dreams: cliffside, seaside, bedside, adrift and unable to return home, or trapped in the corners of those homes.

Rover is not composed of videos as we normally understand moving images. To understand how it animates its images, it helps to understand how it captures them.

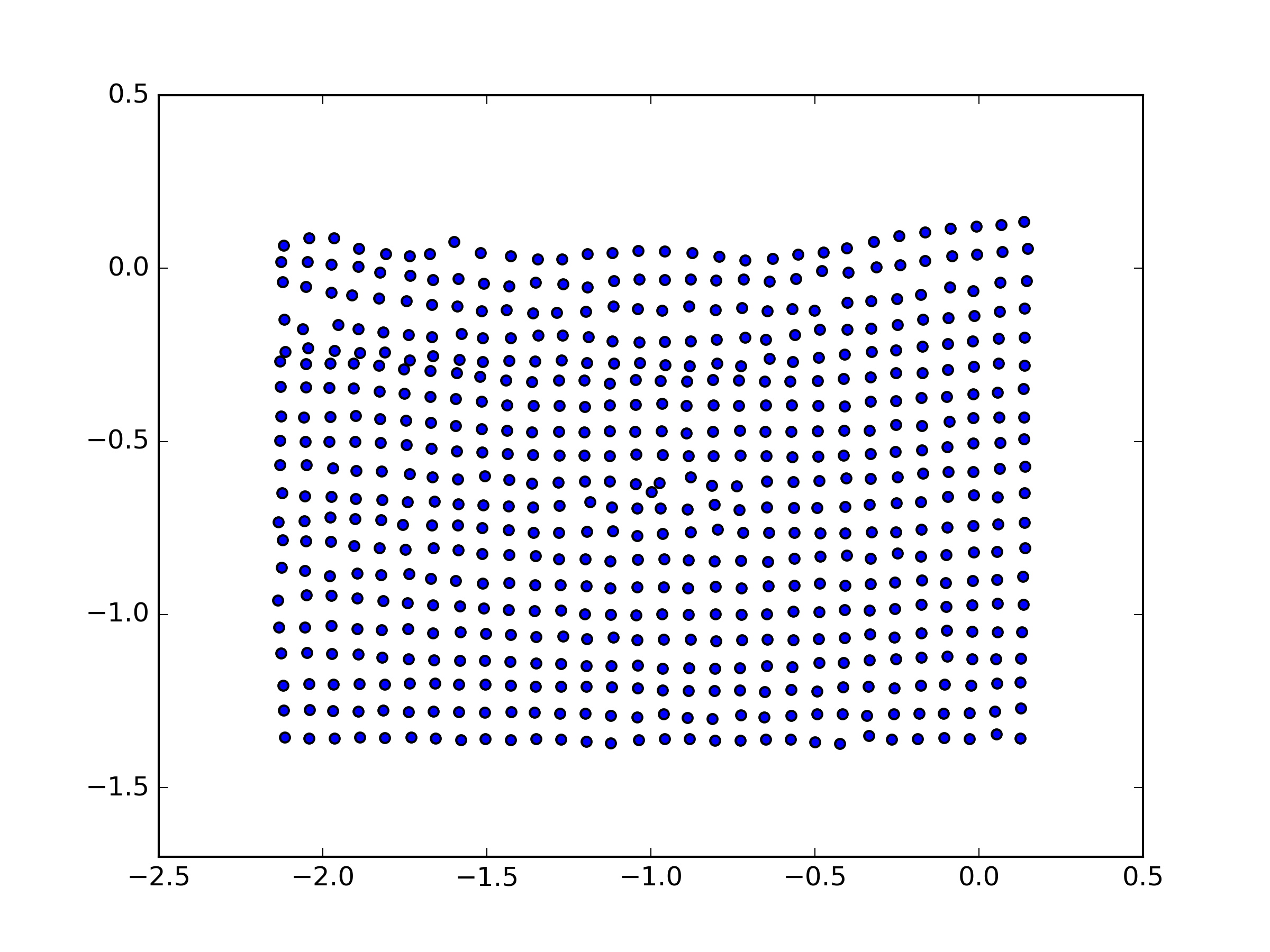

Through a process called “computational light field capture,” hundreds of images are recorded over time and from different points in space. This allows Rover to record all the light passing through a region of space, much as you might imagine capturing the light passing through a window. Later, by algorithmically manipulating the recorded light, Rover is able to resynthesize the space. In doing so, Rover chooses what (and when) to observe in the scene after the fact, and can revisit the scene ad infinitum, following a new path through it each time.

The system used to capture the images consists of custom electronics and an embedded computer mounted to a robotic plotter. It explores the world before it while being bounded physically by a system of belts and motors which delimit its 2-dimensional “window”. It strains outwards from this window into space it cannot readily inhabit: our space.

While looking, Rover is also listening. It records and extracts audio using machine-listening techniques, retrieving sounds we would otherwise dismiss as noise. Upon revisiting captured moments, just as images are ceaselessly navigated by the system, sounds are revisited and reshaped until they are no longer commonplace.

What Rover understands as light data (light fields), we understand as familiar spaces: a doorway into a room, a place where someone once rested, an open book left on a table. As the installation roves through these light fields, it synthesizes for us dreamlike views of the spaces and scenes it has previously inhabited.

Please note: If there is interest, we would be excited to lead a workshop on the techniques we use to generate imagery for Rover!

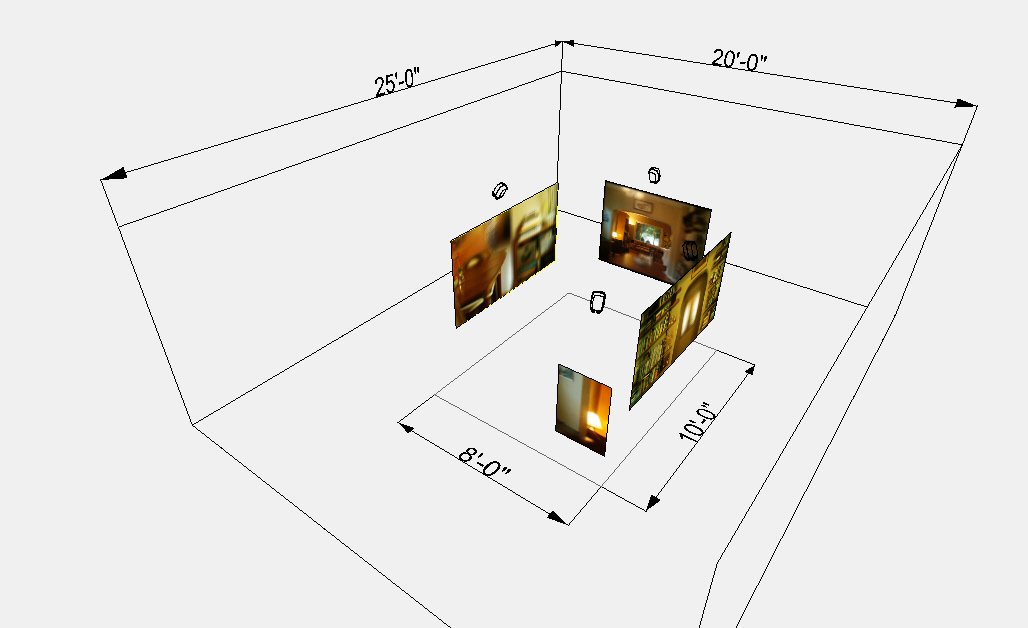

Installation Plans for Currents 2016

Please note: This floor plan anticipates a dim to dark viewing environment with little to no competing sound from outside the installation. The dimensions listed in the floor plan are suggested but variable. The important aspects of the installation dimensions are that the area enclosed by the screens be roughly 8’x10’ and that there be at least a ten-foot perimeter surrounding the screens.

Projectors and speakers are ceiling mounted. Speakers are at a height of 8-10 feet off the ground (approx. two to three feet above the projection screens).

Please note: Equipment requests are made in the interest of minimizing the risk and cost of shipping equipment from Seattle, WA. If the festival is unable to provide the requested equipment, the artists can pursue other resources to procure the equipment as needed.

Equipment provided by the artists:

- System audio and video playback computers.

- Four (4) double-sided/rear-projection screens (ROSEBRAND 55” grey PVC screen), with mounting assembly hardware.

Equipment kindly requested of the festival hosts:

- Four (4) HD projectors suitable for rear projection, given the ambient light conditions of the installation.

- Four (4) high-quality speakers (reference Genelec 8030).

- One (1) multi-channel sound card (4+ channels of output, e.g. MOTU UltraLite).

- Audio and video cabling to playback devices.

A previous iteration of Rover was presented at the Black Box 2.0 Festival, May 28 to June 7, 2015.

The imagery gathered for this iteration of Rover was captured with a custom mechatronic light field capture system designed to be portable and scalable according to the framing and depth required for each scene.

By gathering hundreds of images in a structured way, we are able to create a synthetic camera “aperture” which allows us to resynthesize a scene after the fact, refocusing, obscuring, and revealing points of interest in real time. The result is a non-linear hybrid between photography and video.
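As a rough illustration of the synthetic aperture idea, and not a description of Rover's actual software, the sketch below uses the common "shift-and-add" approach: each captured view is shifted in proportion to its camera position on the capture plane, and the shifted views are averaged. The function name `refocus`, the `disparity` parameter, and the use of plain nested lists for grayscale images are all assumptions made to keep the example dependency-free.

```python
def refocus(views, positions, disparity):
    """Shift-and-add refocusing over a set of views (illustrative sketch).

    views:     list of grayscale images, each a list of rows of floats,
               all with the same dimensions.
    positions: list of (x, y) camera offsets on the capture plane,
               one per view, in arbitrary grid units.
    disparity: pixels of shift per grid unit. Scene points whose apparent
               position moves by this amount between neighbouring views
               line up and appear sharp; everything else blurs.
    """
    height, width = len(views[0]), len(views[0][0])
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]

    for image, (px, py) in zip(views, positions):
        # Integer pixel shift for this view (a real system would interpolate).
        dx = round(px * disparity)
        dy = round(py * disparity)
        for y in range(height):
            sy = y + dy
            if not 0 <= sy < height:
                continue
            for x in range(width):
                sx = x + dx
                if 0 <= sx < width:
                    total[y][x] += image[sy][sx]
                    count[y][x] += 1

    # Average only over the views that actually covered each pixel.
    return [
        [total[y][x] / count[y][x] if count[y][x] else 0.0 for x in range(width)]
        for y in range(height)
    ]
```

Sweeping `disparity` over a range of values moves the plane of focus through the scene, which is one way the "re-focusing, obscuring and revealing" described above could be driven after capture.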

In a somewhat analogous process, audio is recorded at the site of each light field capture and analyzed to find events and textures of interest, using audio classification techniques from the field of Music Information Retrieval (MIR). Based on the features discovered in the recordings, sonic moments or textures which may have otherwise gone unnoticed are exposed and recomposed in concert with the visual system.
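To give a sense of what "features discovered in the recordings" can mean, here is a minimal sketch of frame-based feature extraction. It is a simplified stand-in written in Python, not the SCMIR/SuperCollider analysis used for Rover. The two features (RMS level and zero-crossing rate), the function names, and the median-based threshold are all assumptions chosen for illustration.

```python
import math


def frame_features(samples, frame_size=1024, hop=512):
    """Return one (rms, zero_crossing_rate) pair per analysis frame.

    samples: a sequence of floats in the range -1.0 to 1.0 (mono audio).
    """
    features = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        # RMS: a rough measure of how loud the frame is.
        rms = math.sqrt(sum(s * s for s in frame) / frame_size)
        # Zero-crossing rate: a rough measure of noisiness or brightness.
        crossings = sum(
            1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0)
        )
        features.append((rms, crossings / (frame_size - 1)))
    return features


def loud_frames(features, ratio=2.0):
    """Indices of frames whose RMS exceeds `ratio` times the median RMS."""
    levels = sorted(rms for rms, _ in features)
    median = levels[len(levels) // 2]
    return [i for i, (rms, _) in enumerate(features) if rms > ratio * median]
```

Frames flagged this way are candidate "events". A fuller analysis would add spectral features and a trained classifier rather than a single threshold.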

Some of the techniques and technologies used include:

- Music Information Retrieval for audio classification (using SCMIR by Nick Collins)

- K-means clustering for ordering sound according to self-similarity

- Visual Structure From Motion for recovering image locations and rectifying all images to a common image plane

- Custom software driving the resynthesis of the light field scenes (via OSC from SuperCollider)

- Real-time audio granulation software written in SuperCollider
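
The k-means step named in the list above can be sketched as follows. This is an illustrative Python version under stated assumptions, not the code used in Rover: each sound segment is assumed to be summarized as a tuple of numeric features, the farthest-point initialization is our own choice for making the example deterministic, and `playback_order` is a hypothetical helper showing one way clusters could be turned into an ordering.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c]))
        for p in points
    ]


def kmeans(points, k, iterations=20):
    """Plain k-means with a deterministic farthest-point initialization."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Next seed: the point farthest from all centroids chosen so far.
        centroids.append(
            max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        )
    for _ in range(iterations):
        labels = assign(points, centroids)
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # leave an empty cluster's centroid where it is
                centroids[c] = tuple(
                    sum(axis) / len(members) for axis in zip(*members)
                )
    return assign(points, centroids), centroids


def playback_order(points, labels, centroids):
    """Order segment indices by cluster, most typical members first."""
    return sorted(
        range(len(points)),
        key=lambda i: (labels[i], sq_dist(points[i], centroids[labels[i]])),
    )
```

Ordering by cluster and then by distance to the centroid places self-similar sounds next to each other, so a granulator stepping through the list moves gradually from one texture to the next.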